Building A Data Pipeline For Business Analytics

Introduction

Business analysis depends on a steady flow of accurate data. A data pipeline provides this by moving data from its source to a destination where it can be analyzed. It gathers, processes, and delivers data with minimal delay. An effective pipeline reduces mistakes and saves time. It frees businesses to focus on insights instead of labour-intensive data processing. Staying ahead in a data-driven world calls for building a robust data pipeline, which is also essential for sound decisions. Many business professionals look for Business Analytics Courses in Delhi so that they can acquire practical skills and further their careers.

Building A Data Pipeline For Business Analytics

Business analysis depends on timely and precise data. A data pipeline delivers this by moving data from its origin to a location where it can be evaluated. It helps analysts get the correct information on time. A well-designed pipeline turns raw data into useful knowledge while reducing manual effort and mistakes. Businesses that invest in effective pipelines stay competitive and make better decisions.

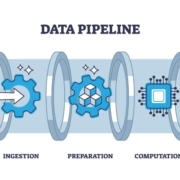

1. Understanding a Data Pipeline

A data pipeline is a sequence of steps that moves data from one place to another. It begins by gathering raw data from several sources, such as databases, APIs, logs, or files. The pipeline then processes and transforms the data to make it fit for analysis. Finally, it loads the data into a destination such as a data warehouse or analytics dashboard.
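The three steps described above can be sketched as three small functions chained together. This is only an illustrative outline: the function names, the sample records, and the in-memory list standing in for a warehouse are all assumptions, not part of any particular tool.

```python
Record = dict  # one row of data, e.g. {"order_id": 1, "amount": "19.99"}

def extract() -> list[Record]:
    # Hypothetical source: a real pipeline would query a database, API, or log file.
    return [
        {"order_id": 1, "amount": "19.99"},
        {"order_id": 2, "amount": "5.00"},
    ]

def transform(records: list[Record]) -> list[Record]:
    # Convert the amount from text to a number so it can be aggregated later.
    return [{**r, "amount": float(r["amount"])} for r in records]

def load(records: list[Record], destination: list[Record]) -> None:
    # Hypothetical destination: a plain list standing in for a warehouse table.
    destination.extend(records)

def run_pipeline(destination: list[Record]) -> None:
    # Collect, transform, then load, in that order.
    load(transform(extract()), destination)

warehouse: list[Record] = []
run_pipeline(warehouse)
print(warehouse)
```

Keeping each stage as its own function makes it easier to test or replace one stage without touching the others.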

2. Data Collection

Data collection is the first step in building a pipeline. This phase gathers data from many systems. A business may collect sales information from an e-commerce site, or customer feedback from a survey tool. The collection process has to be dependable, because the analysis becomes inaccurate if data is missing. Many companies automate data collection using connectors or APIs. Many learners explore Top Courses for Business Analyst to understand data modelling, reporting, and decision-making techniques.
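As a rough illustration of why missing data matters at this stage, the sketch below reads a small CSV export and separates complete rows from incomplete ones. The CSV content, the field names, and the `collect` helper are hypothetical and inlined only so the example runs on its own.

```python
import csv
import io

# Hypothetical CSV export from an e-commerce site (inlined for the example).
SALES_EXPORT = """order_id,customer,amount
1001,alice,25.00
1002,,40.00
1003,carol,
"""

# Fields assumed to be required for analysis.
REQUIRED_FIELDS = ("order_id", "customer", "amount")

def collect(source_text):
    """Read rows and separate complete ones from those with missing values."""
    complete, incomplete = [], []
    for row in csv.DictReader(io.StringIO(source_text)):
        if all(row.get(field) for field in REQUIRED_FIELDS):
            complete.append(row)
        else:
            incomplete.append(row)
    return complete, incomplete

complete_rows, incomplete_rows = collect(SALES_EXPORT)
print(len(complete_rows), "complete,", len(incomplete_rows), "incomplete")
```

Setting incomplete rows aside, rather than silently dropping them, leaves a record of what the source failed to deliver.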

3. Data Transformation

Raw data is rarely ready for analysis. It has to be cleaned and transformed first. This process removes duplicates and fixes errors. It also standardizes formats such as currencies and dates. Transformation can enrich the data as well. Adding a product category to sales data, for instance, helps with trend analysis. Tools like Apache Spark or AWS Glue handle this process at scale.
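A minimal sketch of these transformations in plain Python might look like the following. It removes duplicate orders, standardizes dates to ISO format, and adds a product category. The category lookup, the two date formats, and the field names are assumptions made for the example; a production pipeline would more likely use a tool such as Spark or Glue.

```python
from datetime import datetime

# Hypothetical lookup used to enrich sales rows with a product category.
PRODUCT_CATEGORIES = {"P1": "Electronics", "P2": "Books"}

# Date formats assumed to appear in the raw data.
KNOWN_DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")

def normalize_date(text):
    """Return the date as YYYY-MM-DD, trying each known format in turn."""
    for fmt in KNOWN_DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"Unrecognized date format: {text!r}")

def transform(rows):
    seen = set()
    cleaned = []
    for row in rows:
        if row["order_id"] in seen:
            continue  # skip duplicate orders
        seen.add(row["order_id"])
        cleaned.append({
            "order_id": row["order_id"],
            "date": normalize_date(row["date"]),
            "product_id": row["product_id"],
            "category": PRODUCT_CATEGORIES.get(row["product_id"], "Unknown"),
        })
    return cleaned

raw = [
    {"order_id": 1, "date": "2024-03-05", "product_id": "P1"},
    {"order_id": 1, "date": "2024-03-05", "product_id": "P1"},  # duplicate
    {"order_id": 2, "date": "05/03/2024", "product_id": "P2"},
]
clean_rows = transform(raw)
print(clean_rows)
```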

4. Data Storage

Once the data has been cleaned, it has to be stored. Companies usually use data warehouses or data lakes for this. A data warehouse holds structured data in tables. A data lake can hold both structured and unstructured data. The choice depends on the kind of analysis: warehouses suit dashboards and reports, while lakes suit advanced analytics and machine learning.

5. Data Loading

Next, the pipeline loads the transformed data into its final destination. This could be a visualization tool like Power BI or Tableau. Alternatively, it could be a machine learning model that uses the data to make predictions. The loading phase has to be fast and dependable. Many companies use batch loading for large datasets. Decisions that require up-to-the-minute data call for real-time loading.
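Batch loading can be sketched with Python's built-in `sqlite3` module, using an in-memory database as a stand-in for the real destination. The table name, columns, sample rows, and batch size are assumptions chosen for illustration.

```python
import sqlite3

def load_in_batches(conn, rows, batch_size=2):
    """Insert rows in fixed-size batches, committing after each batch."""
    batches = 0
    for start in range(0, len(rows), batch_size):
        batch = rows[start:start + batch_size]
        conn.executemany(
            "INSERT INTO sales (order_id, amount) VALUES (?, ?)", batch
        )
        conn.commit()
        batches += 1
    return batches

# In-memory database standing in for a warehouse table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (order_id INTEGER PRIMARY KEY, amount REAL)")

rows = [(1, 19.99), (2, 5.0), (3, 42.5), (4, 7.25), (5, 12.0)]
batches_written = load_in_batches(conn, rows)
total = conn.execute("SELECT COUNT(*) FROM sales").fetchone()[0]
print(batches_written, "batches,", total, "rows loaded")
```

Committing once per batch limits how much work is lost if a load fails partway through. A real-time loader would instead write each record as it arrives.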

6. Monitoring and Maintenance

A pipeline is not a one-time setup. It needs regular monitoring. The pipeline can break if a source changes its format, and performance can suffer as data volume grows. Monitoring tools help businesses detect problems early. Teams also run tests to check that the data is accurate. Maintenance keeps the pipeline efficient and helps avoid expensive errors.
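One simple kind of test is a check that each incoming batch still has the expected columns and a plausible number of rows. The sketch below is a hypothetical example of such a check; the column names and the `check_batch` helper are assumptions, and dedicated monitoring tools offer far more than this.

```python
# Columns the downstream steps are assumed to rely on.
EXPECTED_COLUMNS = {"order_id", "date", "amount"}

def check_batch(rows, min_rows=1):
    """Return a list of human-readable problems found in a batch of rows."""
    problems = []
    if len(rows) < min_rows:
        problems.append(f"expected at least {min_rows} rows, got {len(rows)}")
    for i, row in enumerate(rows):
        missing = EXPECTED_COLUMNS - set(row)
        unexpected = set(row) - EXPECTED_COLUMNS
        if missing or unexpected:
            problems.append(
                f"row {i}: missing={sorted(missing)} unexpected={sorted(unexpected)}"
            )
    return problems

healthy = [{"order_id": 1, "date": "2024-03-05", "amount": 19.99}]
# Simulates a source that renamed "date" to "order_date".
changed = [{"order_id": 1, "order_date": "2024-03-05", "amount": 19.99}]

print(check_batch(healthy))
print(check_batch(changed))
```

Running a check like this before the transformation step surfaces a format change as a clear message instead of a failure further down the pipeline.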

Here’s a table summarizing each stage of the data pipeline, the tools used, and the outcome at each step:

| Stage | Description | Example Tools / Technologies | Outcome |
| --- | --- | --- | --- |
| Data Collection | Gathers raw data from different sources like databases, APIs, or logs. | Apache NiFi, Talend, Fivetran, APIs | Raw data collected from multiple systems. |
| Data Transformation | Cleans, normalizes, and enriches data for analysis. | Apache Spark, AWS Glue, dbt | Clean, structured, and enriched data. |
| Data Storage | Stores processed data in a centralized location. | Snowflake, Amazon Redshift, Google BigQuery, Data Lakes | Organized and easily retrievable data. |
| Data Loading | Moves data to visualization tools or ML models. | Airflow, Informatica, Power BI, Tableau | Data available for reports and dashboards. |
| Monitoring & Maintenance | Tracks pipeline performance and health. | Grafana, Prometheus, CloudWatch | Reliable and up-to-date data pipeline. |

Conclusion

A good data pipeline is crucial for business analytics. It moves data from source to destination with few interruptions and converts raw data into insights that drive decisions. Automation saves companies time and reduces errors. Working professionals prefer a Business Analysis Online Course to learn at their own pace and apply analytics in real projects. Investing in a robust data pipeline gives businesses a solid basis for expansion and innovation, because it lets them react more quickly to market developments.